Spark as a Service On Lentiq

Data analytics and machine learning at scale require scalability. By using our integrated Apache Spark -as-a-Service, you can run, optimize and scale any type of batch, real-time, data processing or machine learning workload. No need for fancy technical knowledge. We automated that behind the scenes.

There's a limit to your love, not to Spark scaling.

Spark-as-a-Service running on Lentiq is quick and easy to set up. You get instant access to a fully optimized environment so you can instantly run data science projects. It's fact-based and proven.

Each project you start in a data pool can have multiple, dedicated Spark clusters to handle the specific workloads. Meanwhile, in the background, Kubernetes does the techy part and manages everything, so you don't have to.

Connect notebooks to an existent Spark cluster through a one liner and accelerate your data science, machine learning and data processing tasks.

Connecting to a Spark cluster pre-created in the data pool.

from pyspark.sql import SparkSession

spark = SparkSession.builder\

.master(”spark://35.228.151.102:7077")\

.getOrCreate()An existing Spark cluster is required. Click on the Spark icon in the Lentiq interface or provision one. If Spark will not be used for processing a minimal resources configuration can be used. Copy the Spark master URL from the widget and use below as an argument to the master function.

By using Spark under the hood with Jupyter Notebooks, you can maintain the IDE you love and benefit from large scale processing when needed, as well as easily scale with the dataset.

Package your notebooks as executable code ready to be put in production, through our unique "Reusable Code Blocks" technology. This can help you automate your data science workflow.

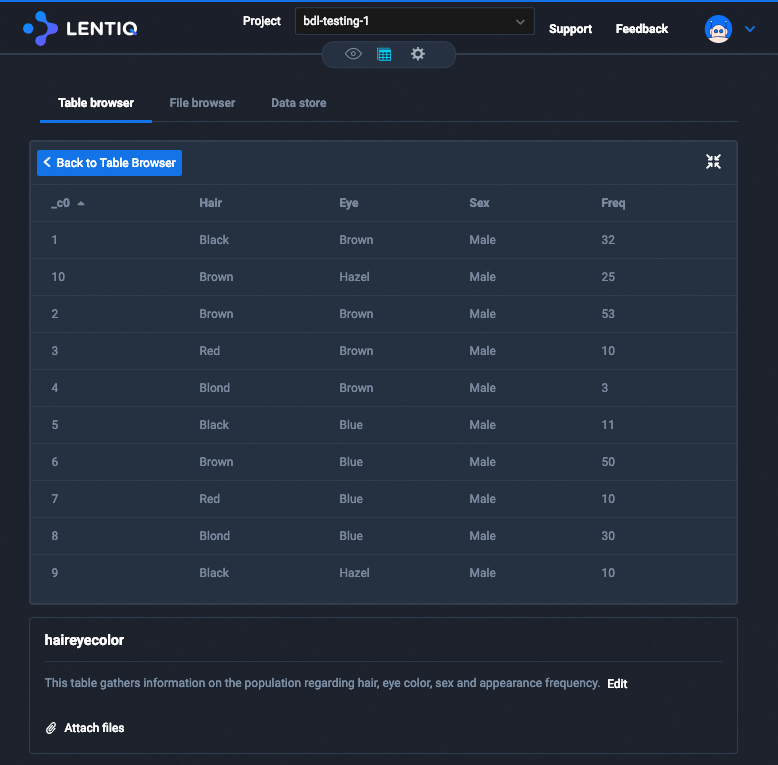

Lentiq's Spark -as-a-Service is seamlessly integrated with the rest of the platform. Creating tables results in having them available for data documentation in the Table Browser page or for standard BI tools through the JDBC connector.