Connecting to Spark from a notebook

In Lentiq, applications can be interconnected seamlessly. This guide shows how to connect a notebook to a Spark cluster when you need to scale data science tasks.

Prerequisites

Before you start, make sure you have:

- A running data pool

- A running project

- A running Jupyter Notebook instance

- A running Spark cluster

How to connect a notebook to a Spark cluster

Once all the prerequisites are in place, follow these steps:

- Connect to the Jupyter Notebook instance using the URL and password shown in the interface.

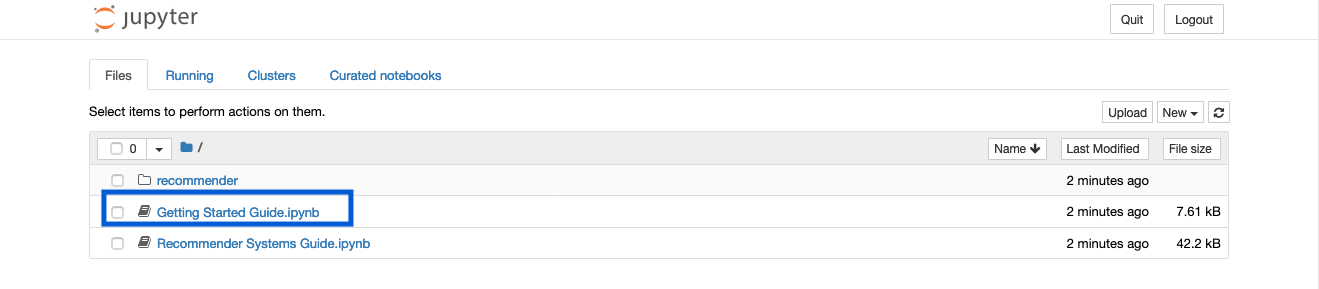

- Create a new notebook, or open the Getting Started Guide notebook.

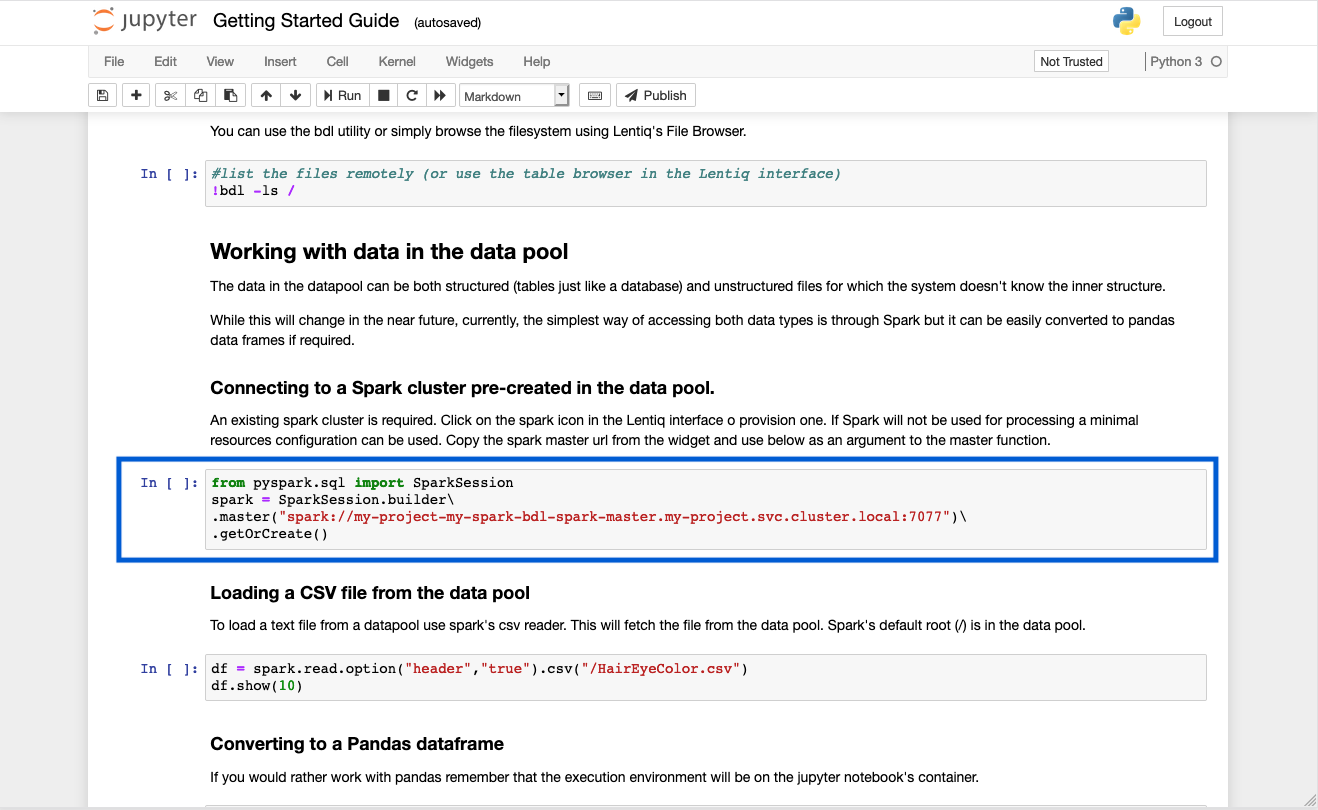

- Add a new cell in which to configure the connection to the Spark cluster or, if you are using the Getting Started Guide notebook, locate its Spark connection cell.

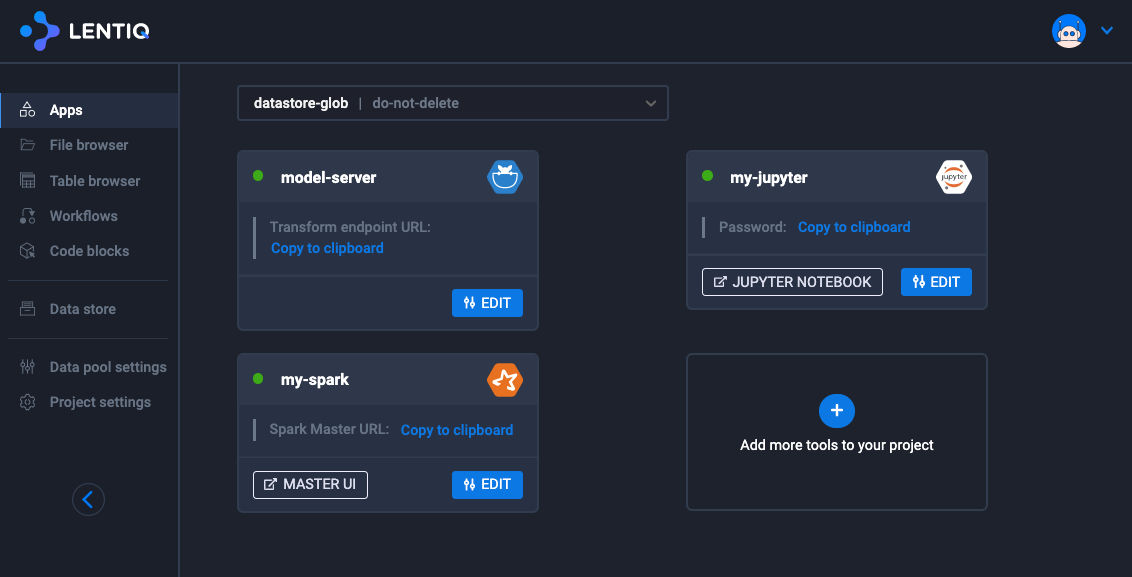

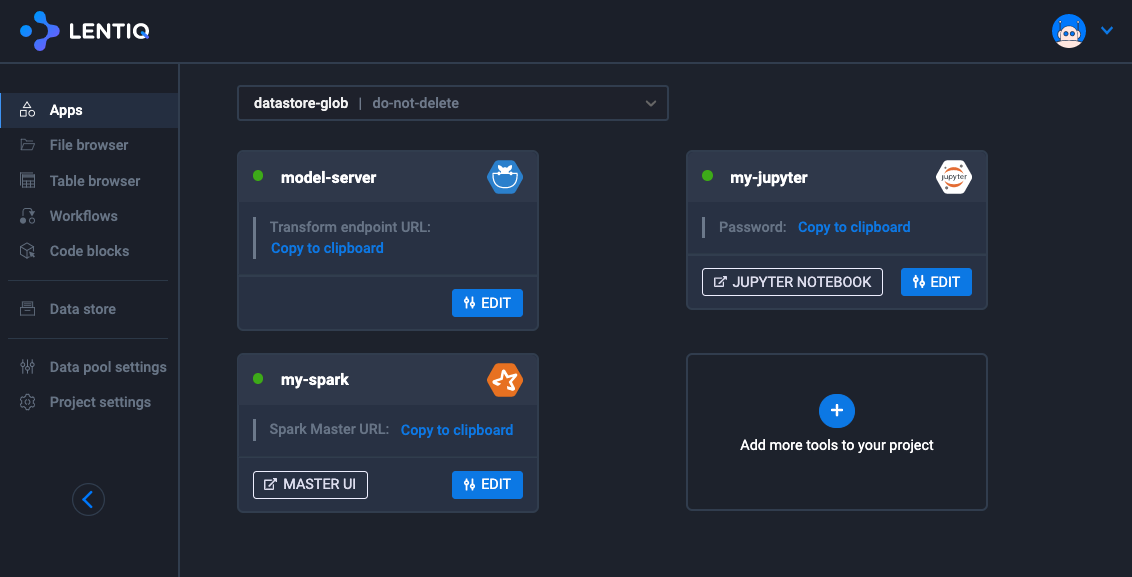

- Copy the Spark Master connection URL from the Spark cluster's application card in the Application Management view.

- Paste the Spark Master connection URL into the new cell, or into the Spark connection cell of the Getting Started Guide notebook.
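What goes into the connection cell depends on the notebook. As a minimal sketch, assuming a Python kernel with PySpark installed and a standalone Spark master whose connection URL has the form `spark://host:port`, the cell could look like the following. The URL value, the application name, and the helpers `check_master_url` and `connect_to_spark` are hypothetical and are not taken from the Getting Started Guide notebook. The URL check guards against an easy mistake: pasting the Web UI address (an `http://` URL) instead of the master URL.

```python
from typing import Tuple
from urllib.parse import urlparse

# Hypothetical placeholder: replace with the Spark Master connection URL
# copied from the Spark cluster application card.
SPARK_MASTER_URL = "spark://spark-master.example:7077"


def check_master_url(url: str) -> Tuple[str, int]:
    """Return (host, port) if url looks like a standalone Spark master URL.

    Raises ValueError otherwise, e.g. when a Web UI http:// URL was pasted.
    """
    parsed = urlparse(url.strip())
    if parsed.scheme != "spark":
        raise ValueError(f"expected a spark:// URL, got {url!r}")
    if not parsed.hostname or parsed.port is None:
        raise ValueError(f"missing host or port in {url!r}")
    return parsed.hostname, parsed.port


def connect_to_spark(url: str, app_name: str = "notebook-session"):
    """Create (or reuse) a SparkSession attached to the given master URL."""
    check_master_url(url)
    # Imported lazily so the URL check works even where PySpark is absent.
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder
        .master(url)          # the copied Spark Master connection URL
        .appName(app_name)    # name shown for the app in the Spark Master Web UI
        .getOrCreate()
    )
```

In the cell, `spark = connect_to_spark(SPARK_MASTER_URL)` creates the session. Because `getOrCreate()` reuses a session that already exists in the same kernel, re-running the cell does not start a second application; to switch to a different master, call `spark.stop()` first.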

- Run the cell by pressing Shift+Enter.

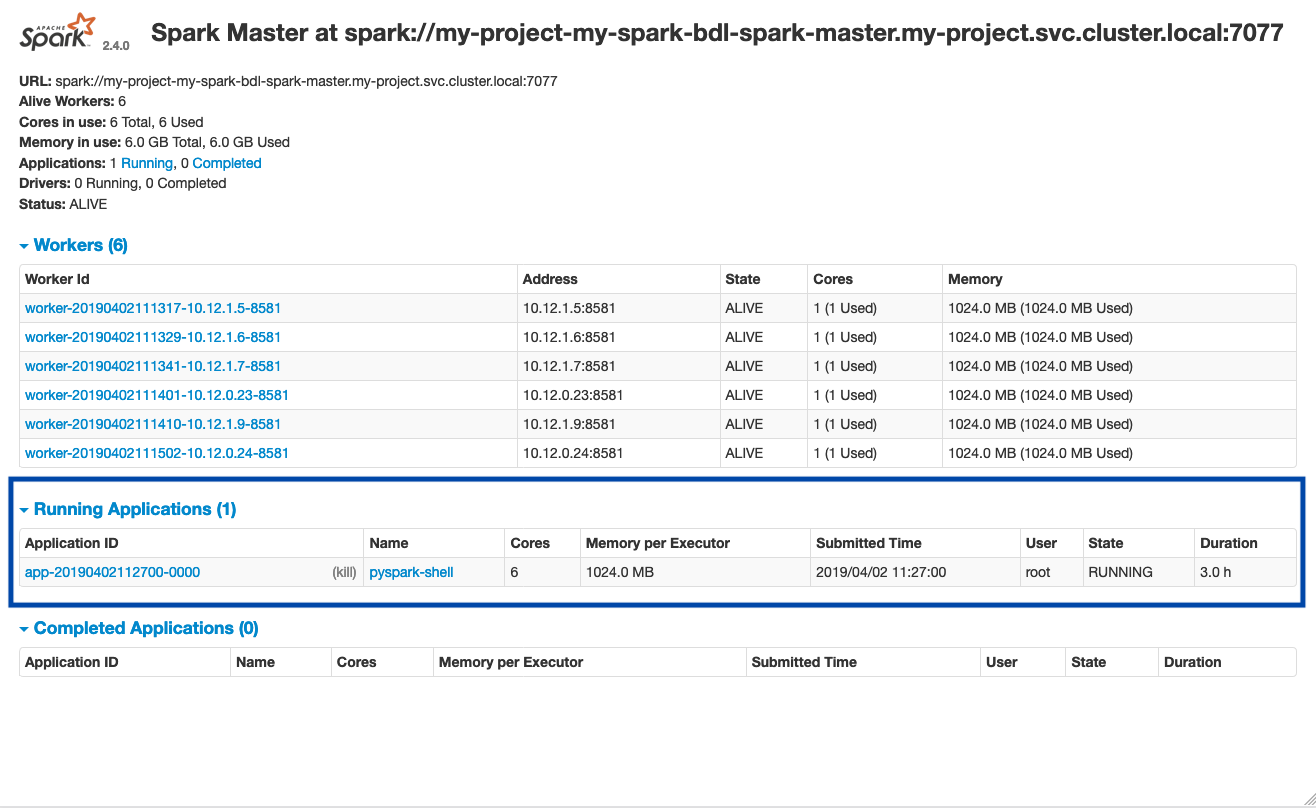

- Wait for the cell to finish running, then open the Spark Master Web UI and confirm that the application is listed as registered.
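The same check can be scripted. Assuming the cluster is a Spark standalone master, its Web UI also serves the cluster state as JSON at the `/json/` path, including an `activeapps` list whose entries carry the application `name`. The sketch below relies on that assumption; the Web UI URL (Spark's standalone default port is 8080, but Lentiq may expose a different address) and the helper names `active_app_names` and `is_app_registered` are hypothetical.

```python
import json
import urllib.request
from typing import List


def active_app_names(master_state_json: str) -> List[str]:
    """Extract the names of running applications from the master's JSON state."""
    state = json.loads(master_state_json)
    return [app.get("name", "") for app in state.get("activeapps", [])]


def is_app_registered(web_ui_url: str, app_name: str, timeout: float = 5.0) -> bool:
    """Fetch <web_ui_url>/json/ and check whether app_name is an active app."""
    endpoint = web_ui_url.rstrip("/") + "/json/"
    with urllib.request.urlopen(endpoint, timeout=timeout) as resp:
        body = resp.read().decode("utf-8")
    return app_name in active_app_names(body)
```

For example, `is_app_registered("http://spark-master.example:8080", "notebook-session")` should return `True` once the connection cell has run, provided the name matches the one passed to `appName`.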